It’s not that easy to synchronize programmed animation and dynamic sound in Flash, that is, to have something happen on screen at exactly the same time a sound starts to play.

I thought about it a bit and came up with two different scenarios where audio and visuals need to be synchronized:

- The first is where a sound plays and something visual on screen happens to accompany that audio event.

- The second is the other way around. Something happens on screen that should be accompanied by a sound.

In the first case the sound is the initiator, in the second case the animation. For the second case I included a Flash example here with the source code available.

1. Animation follows audio

This is the simpler scenario. For example, there’s an online drum machine built in Flash that plays a drum sound on every beat, such as the kick drum in a techno track. On the machine’s interface there’s a small red LED light (a MovieClip) that should blink each time the drum hits.

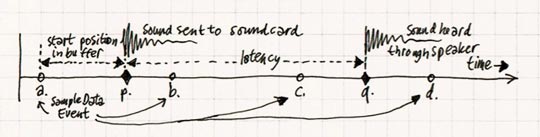

The drum machine has a steady rhythm set in BPM (Beats Per Minute). So once the machine has started it’s always known when the next drum hit will happen. Here’s a graph of the events:

- (a), (b), (c) … are the moments in time when a SampleDataEvent is received and the buffer is filled with samples.

- (p) is where the drum hit should happen. Because the drum BPM and buffer refresh rate are completely independent the drum hit will almost always start somewhere between two buffer refreshes.

- (q) is where the drum sound is actually heard through the speakers. There is a delay between when the samples are sent out from Flash to when they are played through the speakers. This is the latency; the time needed for the soundcard to process the samples and send them as analog audio to the soundcard’s outputs. Latency is the duration from (p) to (q).

To synchronize the two it’s a simple addition: duration (a)(p) plus duration (p)(q).

- (a)(p) is the time from the first sample in the buffer to the first sample of the drum hit. This duration is measured in samples; to convert it to milliseconds, divide it by 44.1 (the sample rate of 44100 Hz divided by 1000).

- (p)(q) is the latency. That’s ((SampleDataEvent.position / 44.1) - SoundChannelObject.position). (See the AS3.0 Language reference on SampleDataEvent.)

- The result is the total delay in milliseconds. A Timer could be started when the buffer is filled at (a) so that the LED blinks at the frame nearest (q).
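The addition described above could be sketched roughly like this. It is a minimal illustration and not the drum machine’s actual code: the class name LedSync, the fields _led and _nextHitSample, the buffer size of 2048 and the fixed 120 BPM interval are all assumptions made for the example.

```actionscript
package
{
    import flash.display.Sprite;
    import flash.events.SampleDataEvent;
    import flash.events.TimerEvent;
    import flash.media.Sound;
    import flash.media.SoundChannel;
    import flash.utils.Timer;
    import flash.utils.setTimeout;

    /** Hypothetical sketch: blink an LED at the moment a drum hit becomes audible. */
    public class LedSync extends Sprite
    {
        private static const SAMPLES_PER_MS : Number = 44.1;
        private static const BUFFER_SIZE : int = 2048;
        /** Assumed: 120 BPM, so one beat every 22050 samples. */
        private static const SAMPLES_PER_BEAT : Number = 22050;

        private var _sound : Sound = new Sound();
        private var _channel : SoundChannel;
        private var _led : Sprite = new Sprite();
        /** Absolute sample position of the next drum hit (p). */
        private var _nextHitSample : Number = SAMPLES_PER_BEAT;

        public function LedSync()
        {
            _led.graphics.beginFill(0xFF0000);
            _led.graphics.drawCircle(20, 20, 10);
            _led.graphics.endFill();
            _led.visible = false;
            addChild(_led);

            _sound.addEventListener(SampleDataEvent.SAMPLE_DATA, onSampleData);
            _channel = _sound.play();
        }

        private function onSampleData(event : SampleDataEvent) : void
        {
            /** Silence only; a real drum machine would write its samples here. */
            for(var i : int = 0; i < BUFFER_SIZE; i++)
            {
                event.data.writeFloat(0);
                event.data.writeFloat(0);
            }

            /** Does the next hit (p) fall inside this buffer, which starts at (a)? */
            var hitOffset : Number = _nextHitSample - event.position;
            if(hitOffset >= 0 && hitOffset < BUFFER_SIZE)
            {
                /** (a)(p): samples converted to milliseconds. */
                var ap : Number = hitOffset / SAMPLES_PER_MS;
                /** (p)(q): latency. Guarded, the channel may not be assigned yet. */
                var pq : Number = _channel ? (event.position / SAMPLES_PER_MS) - _channel.position : 0;

                var timer : Timer = new Timer(Math.max(0, ap + pq), 1);
                timer.addEventListener(TimerEvent.TIMER, onLedTimer);
                timer.start();

                _nextHitSample += SAMPLES_PER_BEAT;
            }
        }

        private function onLedTimer(event : TimerEvent) : void
        {
            Timer(event.target).removeEventListener(TimerEvent.TIMER, onLedTimer);
            _led.visible = true;
            /** Switch the LED off again after a short, arbitrary 80 ms. */
            setTimeout(function() : void { _led.visible = false; }, 80);
        }
    }
}
```

A Timer only fires between frames, so the LED still changes on the frame nearest (q) rather than at the exact millisecond.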

2. Audio follows animation

Download the source code of this example.

This second scenario is a bit more complicated. Hopefully someone else has a simpler solution. But until then this is the solution I use.

Click the ‘Start’ button in the example above: Balls bounce on the floor and each time one of them hits the floor a ‘boing’ sound plays. A simple implementation of Newton’s laws of motion.

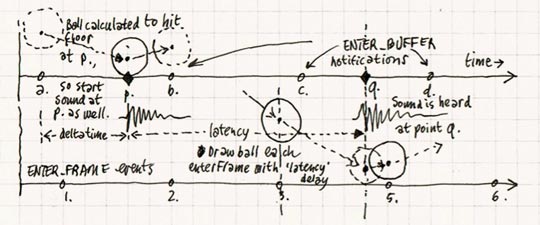

A graph of the events:

- (a), (b), (c) … are the times when a buffer is requested and filled. Same as in the previous example.

- (1), (2), (3) … are frames where Flash updates the screen (Event.ENTER_FRAME).

- (p) is where the ball hitting the floor is calculated to happen.

- (q) is where the sound is heard.

- (r) is where the ball hitting the floor is drawn on screen.

What’s going on?

The trick is to delay drawing the animation for a ‘latency’ amount of time.

- First calculate where the ball WOULD be (but NOT draw it).

- Fire a sound if the calculation shows the ball would hit the floor.

- Draw the animation with a delay determined by the latency.

That is the principle of how it works. The actual implementation is a bit more involved:

- At (a), (b), (c) … (each SampleDataEvent.SAMPLE_DATA) the position of the ball is calculated. The current time and position of the ball are stored in a Vector called _history.

- If the calculation shows the ball will hit the floor during the current buffer (at (p)):

    - The time from the start of the buffer till the hit is calculated (a)(p).

    - The sound is started at time offset (a)(p) in the buffer.

- Meanwhile at (1), (2), (3) … (at each Event.ENTER_FRAME) the screen is updated.

    - To know where to draw the ball the _history of the ball is used.

    - First get the current time and subtract the latency. This is the moment in the past that should be drawn on screen.

    - Search in the ball’s _history for that moment in the past.

    - It’s very unlikely however that the position at exactly the required millisecond is stored. More likely it falls somewhere in between two stored moments in time. So get the closest positions before and after the requested time and use linear interpolation to draw the ball at the right position between the ‘before’ and ‘after’ records.
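The audio half of these steps, starting the sound at offset (a)(p) inside the buffer, might look something like the sketch below. The class BufferWriter, the method writeWithOffset and its parameters are hypothetical names invented for this illustration; the ‘boing’ is assumed to be available as a Vector of mono samples between -1 and 1.

```actionscript
package
{
    import flash.utils.ByteArray;

    /** Hypothetical helper, not part of the example's source code. */
    public class BufferWriter
    {
        /**
         * Fills one stereo buffer (event.data of a SampleDataEvent).
         * Silence is written until startOffset, from there on the sound.
         *
         * @param buffer      The ByteArray to write to.
         * @param sound       Mono samples of the sound to play.
         * @param startOffset Duration (a)(p) in samples: int(milliseconds * 44.1).
         * @param readIndex   Where to continue reading in sound (0 for a new hit).
         * @param bufferSize  Number of stereo sample pairs to write.
         * @return The read index to pass in for the next buffer.
         */
        public static function writeWithOffset(buffer : ByteArray, sound : Vector.<Number>, startOffset : int, readIndex : int = 0, bufferSize : int = 2048) : int
        {
            var read : int = readIndex;
            for(var i : int = 0; i < bufferSize; i++)
            {
                var sample : Number = 0;
                if(i >= startOffset && read < sound.length)
                {
                    sample = sound[read];
                    read++;
                }
                /** Same value for the left and the right channel. */
                buffer.writeFloat(sample);
                buffer.writeFloat(sample);
            }
            return read;
        }
    }
}
```

In the buffer where the hit happens, startOffset would be (a)(p) in samples and readIndex zero. In the buffers that follow, startOffset would be zero and readIndex the value returned by the previous call, so that a sound longer than the remainder of the buffer keeps playing.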

So what’s going on?

I’m afraid this explanation might be more complex than the actual code. So I’ll add some of the relevant ActionScript.

To understand it you should know that there is an AudioDriver class that listens to the SampleDataEvent. On each SampleDataEvent it sends an ENTER_BUFFER notification (this is PureMVC) to notify all Ball instances to store their position. To do this the function enterBuffer is called on each Ball instance:

```actionscript
public function enterBuffer() : void
{
    var hit : Boolean;
    if(_isDragging)
    {
        _vy = 0;
    }
    else
    {
        _vy += _gravity;
        _y += _vy;
        /** If the ball hits the floor. */
        if(_y > _floorY - _radius)
        {
            var distance : Number = _vy;
            var overlap : Number = _y + _radius - _floorY;
            _hitAlpha = (distance - overlap) / distance;
            _y = _floorY - _radius - overlap;
            _vy *= -1;
            hit = true;
            dispatchEvent(new Event(Ball.BOUNCE));
        }
    }
    /** Store as data for later use. */
    _history.splice(0, 0, new BallVO(_x, _y, _vx, _vy, hit));
    /** Keep maximum 100 history items so the maximum latency is buffer * 100. */
    if(_history.length > 100)
    {
        var n : int = _history.length;
        while(--n > 99)
        {
            _history.pop();
        }
    }
}
```

This function does what was expected: A new position is calculated and stored in a BallVO value object, the BallVO is added to the _history and a bit of maintenance is done: Keep _history’s length within bounds.

The only other thing is the _isDragging Boolean. Balls can be dragged to a new position.

The AudioDriver also sends an ENTER_FRAME notification. This is just like a regular Event.ENTER_FRAME from a DisplayObject except that it has an AudioStreamInfoVO value object as an argument. The AudioStreamInfoVO has a ‘latencyInMS’ property that holds (as you’ll have guessed) the latency in milliseconds by which the animation should be delayed.

```actionscript
public function enterFrame(infoVO : AudioStreamInfoVO) : void
{
    graphics.clear();
    graphics.lineStyle(2, 0xFFFFFF);
    graphics.beginFill(0xBBBBBB, 0.1);
    if(_isDragging)
    {
        graphics.drawCircle(_x, _y, _radius);
    }
    else
    {
        var time : Number = new Date().getTime() - infoVO.latencyInMS;
        var i : int = -1;
        var n : int = _history.length;
        while(++i < n)
        {
            /** Get the data from immediately before and after 'time'. */
            if(_history[i].time < time && i > 0)
            {
                var before : BallVO = _history[i];
                var after : BallVO = _history[i - 1];
                var alpha : Number = (time - before.time) / (after.time - before.time);
                /** If a bounce on the floor happened between before and after. */
                if(before.vy > 0 && after.vy < 0)
                {
                    /** Linear interpolation to correct the vertical position. */
                    var maxY : Number = _floorY - _radius;
                    var partBeforeBounce : Number = maxY - before.y;
                    var partAfterBounce : Number = maxY - after.y;
                    var bounceAlpha : Number = partBeforeBounce / (partBeforeBounce + partAfterBounce);
                    if(alpha < bounceAlpha)
                    {
                        var y : Number = before.y + (before.vy * bounceAlpha);
                    }
                    else
                    {
                        y = after.y + (before.vy * (1 - bounceAlpha));
                    }
                }
                else
                {
                    /** Regular linear interpolation for a ball in mid flight. */
                    y = before.y + (alpha * (after.y - before.y));
                }
                if(before.hit) graphics.beginFill(0xFFFFFF);
                graphics.drawCircle(_x, y, _radius);
                break;
            }
        }
    }
}
```

So in this function the variable ‘time’ holds the moment in the past that must be drawn. Together with ‘before.time’ and ‘after.time’ it results in ‘alpha’, the interpolation value between before and after. The resulting ball position calculation is this:

y = before.y + (alpha * (after.y - before.y));
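To make the numbers concrete, here is the same calculation as a small standalone function with made-up values. The function name interpolateY and all the numbers are only for illustration and do not come from the example’s source code.

```actionscript
package
{
    /**
     * Hypothetical helper: linear interpolation of the ball's y position
     * between two stored records, for a ball in mid flight.
     */
    public function interpolateY(time : Number, beforeTime : Number, beforeY : Number, afterTime : Number, afterY : Number) : Number
    {
        /** 0 at the 'before' record, 1 at the 'after' record. */
        var alpha : Number = (time - beforeTime) / (afterTime - beforeTime);
        return beforeY + (alpha * (afterY - beforeY));
    }
}
```

Say the two records were stored at 1000 ms and 1046 ms with the ball at y = 100 and y = 120, and the moment to draw is 1023 ms. Then alpha is 23 / 46 = 0.5 and interpolateY(1023, 1000, 100, 1046, 120) returns 110, exactly halfway between the two stored positions.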

The new thing here is that if the ball hits the floor somewhere between before and after (if(before.vy > 0 && after.vy < 0)), then the regular linear interpolation doesn’t give the right visual result and some extra code is necessary. But that’s another story.

All this code is not optimized at all. In this example I wanted to keep it all as clear as possible to read and understand.

Hi Tendouji, I hope you could find your answers in the source code. It’s difficult to explain clearer in English than in ActionScript :-).

Anyway, about these durations:

(p)(q) is the easy one: the audio system latency, the time it takes your audio driver and soundcard to process the samples and present them as sound on the soundcard’s audio output. The calculation needs two values: the position property of the flash.events.SampleDataEvent that is passed to your SampleData event listener, and the position property of the flash.media.SoundChannel object that was returned by the play() method of your flash.media.Sound object. The latency in milliseconds is then ((sampleDataEventInstance.position / 44.1) - soundChannelInstance.position). See Adobe’s SampleDataEvent reference.

(a)(p) is internal to your Flash application. SampleDataEvents are received at regular intervals, but if you have calculated when a sound has to start playback, it would be a coincidence if that start time were the exact moment, to the millisecond, that a SampleDataEvent is received. More likely the sound should start somewhere in between. The duration (a)(p) is that time difference. So if the sound you want to play should start somewhere between the current and the next SampleDataEvent, then the first sample of the sound should not be at position zero of the buffer but at position (a)(p).

Hope this helps. Maybe a clearer answer can be found elsewhere.

Thanks. The programming is the difficult part for me too, but fun at the same time. Depending on what your goal is it might be worthwhile to have a look at Processing (www.processing.org). I think it’s easier than ActionScript.

Hi,

I am working on the sample no. 1, animation follows audio. But can you explain further on the technical code on how to write this? I am a bit lost in getting the duration (a)(p) plus duration (p)(q).

:/

Great post. I can think of a lot of reasons to synch audio and visual on a web site.

The programming would be the difficult part for me, I come from the music and sound area but maybe it is worth the dive..

Thank you.

Hi Wouter,

I just discovered your blog. You’ve written some excellent articles and examples and got me excited about programming audio in Flash again :) I’m just about to start an audio project where I need the animation to follow the sound and it’s reassuring to find that you have similar solutions to the ones I was thinking of. Thanks, and keep up the good work.